Hot, Hot iPhone Love (More Terrible Neuromarketing)

I hate being late to a party. You finally arrive after the festivities have begun and you know that your friends have already been there for hours, having a grand time doing what they do best. So it is with the latest neuromarketing debacle involving the New York Times and the pseudoscience that appeared on the op-ed page. All the best stuff has already been written.

Summary:

A branding consultant (Martin Lindstrom) commissions a neuromarketing company (MindSign) to do a neuroimaging study. Sixteen subjects underwent fMRI while being exposed to audio and video of ringing iPhones. Visual and auditory cortex was active across all conditions. There was also activity in the insula. The authors interpret the sensory cortex activity as a kind of cross-modal synesthesia. They further interpret the insula activity as the subjects experiencing feelings of love and compassion. Headlines ring loudly around the web: “YOU LOVE YOUR iPHONE”.

Web points of interest:

1) Read the original op-ed piece by Martin Lindstrom to give yourself some context regarding what was said and the arguments that were made. It will probably make your skin crawl with tales of babies wanting cell phones to be iPhones and terrible definitions of synesthesia. Stick with it anyway.

http://www.nytimes.com/2011/10/01/opinion/you-love-your-iphone-literally.html

2) Start at Russ Poldrack’s weblog and read his first post on the topic. He called it crap, and he was being direct and truthful.

http://www.russpoldrack.org/2011/10/nyt-editorial-fmri-complete-crap.html

3) Now read Tal Yarkoni’s excellent in-depth discussion of the problem. If you read nothing else today, go and check this one out.

http://www.talyarkoni.org/blog/2011/10/01/the-new-york-times-blows-it-big-time-on-brain-imaging/

4) Next, read the post by Vaughan Bell at Mind Hacks, which is also a nice follow-up. Double points for using the term “facepalm jamboree”.

http://mindhacks.com/2011/10/02/the-new-york-times-wees-itself-in-public/

5) Finally, see the list of people who support Poldrack’s position on the Lindstrom article. Many of the best minds in neuroscience agree that the Op-Ed piece is not representative of good science:

http://www.russpoldrack.org/2011/10/signers-of-letter-to-editor-of-new-york.html

To be honest, I don’t have a whole lot to add to the conversation. On the topic of reverse inference you really can’t do better than Russ Poldrack and Tal Yarkoni. The Yarkoni blog post is particularly good, effectively nuking the Lindstrom piece from orbit. It is, in a way, poetic, since Poldrack and Yarkoni are working on the databases and methods that will make it possible to put probabilities on arguments such as Lindstrom’s. That is, if insula activation is observed, how likely is it that the emotion of ‘love’ is being experienced? To give their technology a try, surf on over to http://neurosynth.org/ and check it out.

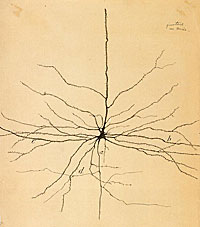
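Reverse inference comes down to Bayes’ rule. P(activation | love) can be high while P(love | activation) stays low, because the insula also activates for many other states. Below is a minimal sketch of that calculation. All probabilities in it are hypothetical placeholders, not values taken from Neurosynth or from any study.

```python
def reverse_inference(p_act_given_state, p_act_given_not_state, prior_state):
    """Posterior P(state | activation) via Bayes' rule.

    p_act_given_state:     P(activation | mental state present)
    p_act_given_not_state: P(activation | mental state absent)
    prior_state:           prior probability that the state is present
    """
    p_activation = (p_act_given_state * prior_state
                    + p_act_given_not_state * (1.0 - prior_state))
    return p_act_given_state * prior_state / p_activation


# Hypothetical numbers: the region responds during "love" 80% of the time,
# but also responds 40% of the time when "love" is absent (other states).
posterior = reverse_inference(0.8, 0.4, 0.5)
print(f"P(love | insula) = {posterior:.2f}")  # → P(love | insula) = 0.67
```

Consider a region that responds equally often whether or not the state is present. For such a region the posterior equals the prior, so observing its activation tells you nothing about the state.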

One aspect of the debate that I am particularly interested in is the purported role of the insula in the experience of love and affection. Unfortunately, Lindstrom provided very little detail about the spatial location of the insula activity, effectively preventing anyone from criticizing the work on that basis. But, for the sake of argument, let’s put the insular question forward. Does it matter where in the insula the activity was observed? The short answer is: absolutely.

There is an excellent paper by A. D. “Bud” Craig entitled “Forebrain emotional asymmetry: a neuroanatomical basis?” that details how the left and right insula have a different pattern of connectivity to the homeostatic afferents that provide information on our current body state. Craig describes how the right insula is preferentially involved in sympathetic nervous system activity geared toward engaging with the environment, energy use, and even “fight or flight” responses. Conversely, the left insula is preferentially involved in parasympathetic activity geared toward contentment, energy conservation, and “rest and digest” responses.

In our evolution, humans seem to have bolted social components on to this underlying insular emotional asymmetry. The right insula seems to be associated with the experience of social disgust and social avoidance. This has been seen in work such as the original Phillips et al. (1997) paper, showing prominent right anterior insula activity during disgust. The left insula seems to be associated with the experience of social compassion and social approach. There is less evidence for this, but meta-analyses such as Ortigue et al. (2010) have reported this pattern.

In short, leaving out which hemisphere the results occurred in is a huge faux pas on the part of Lindstrom. It is not the greatest sin of the piece, and probably not even the greatest sin of the insula argument. Still, it is certainly a prominent FAIL from the perspective of a researcher with an interest in the insula.

One final point of discussion I would like to raise is with regard to an earlier prefrontal.org post on the Seven Sins of Neuromarketing. Let’s see which ones are most prominent in the current discussion:

1) The curtain of proprietary analysis methods limits our knowledge of how effective neuromarketing can be.

We have no idea what methods Lindstrom and his colleagues used to arrive at their findings. It could be the best study in the history of ever, or it could be riddled with common statistical flaws. We have no idea because the work isn’t peer-reviewed. As noted above, we don’t even know where in the insula the results were located!

3) Most people’s introduction to neuromarketing is through press releases, not peer-reviewed studies.

Let’s just establish this as a rule: the New York Times editorial page is not the right place to introduce the world to your cutting-edge, unproven fMRI methods. Period. In fact, we should come up with a verb for what always happens afterward: you get Poldrack’d.

4) Neuromarketing methods are not immune to subjectivity and bias.

In a way, scientific claims are guilty until proven innocent by empirical evidence. Honestly, can I trust a man who has written books with titles like Buyology, Brandwashed, and Brand Sense to be objective about a neuromarketing study with a sensational headline? If this work were peer-reviewed then we could evaluate his evidence in a balanced manner, but an Op-Ed piece does not allow for this luxury and leaves the question of bias open.

6) People are rushing the field to make a quick buck, and not everyone is trustworthy.

I think this is a case in point.

Ortigue S, Bianchi-Demicheli F, Patel N, Frum C, Lewis JW. (2010). Neuroimaging of love: fMRI meta-analysis evidence toward new perspectives in sexual medicine. J Sex Med. 7(11): 3541-3552.

Phillips ML, Young AW, Senior C, Brammer M, Andrew C, Calder AJ, Bullmore ET, Perrett DI, Rowland D, Williams SC, Gray JA, David AS. (1997). A specific neural substrate for perceiving facial expressions of disgust. Nature. 389(6650): 495-498.

Significant Differences

One of the first things you learn in an introductory psychology class is the topic of cognitive bias. These are situations or contexts in which human beings cannot reliably make effective judgements or discriminations. For instance, information that tends to confirm our own assumptions is generally judged to be correct (Confirmation Bias). Another example is the disproportionate attention given to negative experiences relative to positive experiences (Negativity Bias). In each situation perception and decision making are distorted even though we should know better. It may be the case that we need to come up with a new bias to explain investigator behavior. Significance Bias anyone?

There is a great article by Nieuwenhuis, Forstmann, and Wagenmakers in this month’s edition of Nature Neuroscience. Entitled “Erroneous analyses of interactions in neuroscience: a problem of significance”, the paper discusses the problem of how to gauge when two effects differ in neuroscience. It turns out that many papers misjudge the difference between effects by basing their judgement on significance values, even though they should know better.

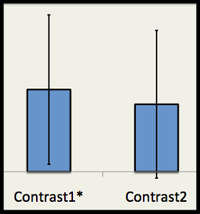

The crux of the issue is that it is improper to judge the difference between two effects by looking at their relative significance. The perceived difference between a significant effect (i.e., p < 0.05) and a non-significant effect (i.e., p > 0.05) does not necessarily mean that the two effects are themselves significantly different. You have to explicitly test for that.
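The arithmetic behind this point fits in a few lines of Python. The numbers below are illustrative, in the spirit of the kind of example Gelman and Stern discuss: an effect of 25 with standard error 10, and an effect of 10 with standard error 10. The sketch assumes the two estimates are independent and approximately normal, so the standard error of their difference is the square root of the summed variances.

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def compare_effects(est_a, se_a, est_b, se_b):
    """Test each effect against zero, then test the difference directly.

    Assumes independent estimates, so se_diff = sqrt(se_a**2 + se_b**2).
    """
    p_a = two_sided_p(est_a / se_a)
    p_b = two_sided_p(est_b / se_b)
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    p_diff = two_sided_p((est_a - est_b) / se_diff)
    return p_a, p_b, p_diff


p_a, p_b, p_diff = compare_effects(25.0, 10.0, 10.0, 10.0)
print(f"effect A:  p = {p_a:.3f}")     # significant on its own
print(f"effect B:  p = {p_b:.3f}")     # not significant on its own
print(f"A minus B: p = {p_diff:.3f}")  # the difference is not significant either
```

A is significant and B is not, but the test that actually answers "do A and B differ?" is nowhere near significance.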

In fMRI, this could mean comparing a brain area that is significant with another brain area that is not. The temptation is to describe the significant region as more active than the nonsignificant region simply because the latter fell below the significance threshold. That may or may not actually be the case, and only a direct test of the difference between the regions can tell you.
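When both regions are measured in the same subjects, one common way to test the difference directly is a paired test. You take each subject’s region A minus region B value and test that difference against zero. The sketch below computes the t statistics with the standard library only. The per-subject values are made up for illustration. A real analysis would take p-values from the t distribution with n - 1 degrees of freedom (for example via `scipy.stats.ttest_rel`) rather than eyeballing the statistics.

```python
import math
import statistics


def one_sample_t(values):
    """t statistic for the mean of `values` against zero (df = n - 1)."""
    n = len(values)
    se = statistics.stdev(values) / math.sqrt(n)
    return statistics.mean(values) / se


def roi_difference_t(roi_a, roi_b):
    """Paired test of region A versus region B across the same subjects.

    Each subject's A - B difference is computed, and the mean of those
    differences is tested against zero.
    """
    diffs = [a - b for a, b in zip(roi_a, roi_b)]
    return one_sample_t(diffs)


# Hypothetical per-subject activation estimates for two regions.
roi_a = [1.2, 0.8, 1.5, 0.9, 1.1, 1.4, 0.7, 1.0]
roi_b = [0.9, 0.2, 1.3, 0.1, 1.0, 0.8, 0.4, 0.6]

print(f"t(A vs 0) = {one_sample_t(roi_a):.2f}")
print(f"t(B vs 0) = {one_sample_t(roi_b):.2f}")
print(f"t(A vs B) = {roi_difference_t(roi_a, roi_b):.2f}")
```

The claim "A is more active than B" depends on the last statistic alone, not on the first two.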

Andrew Gelman and Hal Stern wrote a similar article on the problem a few years ago. The focus of their piece was simply to draw attention to the issue through the use of several theoretical and real life examples. While they were able to say that the problem existed, they were unable to say how prevalent the problem was across any particular scientific discipline. The power of the Nieuwenhuis, Forstmann, and Wagenmakers paper is that it extends the Gelman & Stern work through an analysis of the existing literature to put concrete numbers on how widespread the problem is in neuroscience.

The authors conducted a survey of 513 articles in major neuroscience journals. They identified 157 papers containing an analysis where the authors would be tempted to make an inferential error by focusing on significance. They found that in 78 out of 157 cases (50%) the authors did indeed make the error. That is far higher than I would have guessed, and it is one of the reasons I felt compelled to write about it today. I mean, come on, fifty percent? Really?

In the next-to-last paragraph, the authors specifically state that the error of comparing significance levels is particularly acute in neuroimaging. From my perspective we are almost set up for failure in this regard, as significant regions are displayed in a range of attention-grabbing colors while regions that are not significant are left completely blank.
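A toy version of that display convention shows the trap. Two neighboring voxels with t = 3.05 and t = 2.95 differ by almost nothing, yet one gets painted and the other vanishes. The threshold and t values below are hypothetical, and `render_thresholded` is an illustrative helper, not any package’s API.

```python
def render_thresholded(t_values, threshold):
    """Mimic a thresholded activation map.

    Voxels at or above `threshold` keep their value (drawn in color);
    everything else becomes None (drawn blank).
    """
    return [t if t >= threshold else None for t in t_values]


# Hypothetical t values for a row of five adjacent voxels.
row = [1.2, 3.05, 3.15, 2.95, 0.4]
print(render_thresholded(row, threshold=3.0))  # → [None, 3.05, 3.15, None, None]
```

Once the map is drawn, the blank 2.95 voxel looks exactly like the 0.4 voxel, even though it is nearly identical to its colored neighbor.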

I could rail on a bit longer, but that is time you could be using to go and read this article. There is a lot of good information in the text – it is short, punchy, and well worth your time.

Some additional discussion on the topic:

http://andrewgelman.com/2011/09/the-difference-between-significant-and-not-significant/

Gelman A and Stern H. (2006). The Difference Between “Significant” and “Not Significant” is not Itself Statistically Significant. The American Statistician 60(4), 328-331.

Nieuwenhuis S, Forstmann BU, and Wagenmakers EJ. (2011). Erroneous analyses of interactions in neuroscience: a problem of significance. Nature Neuroscience 14, 1105-1107.

Want your brain scanned?

Our lab is recruiting subjects for a new study of human memory across the lifespan. We are currently running our first phase of the study. If you are between the ages of 25 and 35 and live in the Santa Barbara area please read the text below and email us if you are interested. – Craig

Research Participants Wanted

The Human Memory and Neuroimaging Lab in the Department of Psychological and Brain Sciences at UCSB is seeking research participants for a functional magnetic resonance imaging (fMRI) study investigating the relationship between various personality and cognitive factors and memory. The study will take place on two separate days and will last about two hours each day. Participants will respond to questionnaires, complete cognitive tests, and have their brain activity measured using fMRI. Participants will be compensated with $20/hour and will receive an image of their brain.

To be eligible, participants must:

• Be between the ages of 25 and 35

• Be native English-speakers

• Not be pregnant

• Not have any metal in their bodies that cannot be removed

• Not be claustrophobic

Please email ucsbmemorylab@gmail.com or call (805) 283-9603 for more info.

Click here to read a PDF of our recruiting flyer.

Neuromarketing Debate, May 23rd

Do you feel like neuromarketing is a disruptive new technology, or just another example of neurohype? Regardless of where you stand on the issue you might be interested in a debate I will be participating in next Monday, the 23rd of May, at Stanford Medical School.

The Stanford Interdisciplinary Group on Neuroscience and Society (SIGNS) is hosting the debate, which is focused on neuroscience in the marketplace. Jim Sullivan, the CEO of NeuroSky, Uma Karmarkar from the Stanford Graduate School of Business, and I will all weigh in on the topic of whether neuroscience is being used to manipulate consumers.

I think you might already know where I stand based on my ‘Seven Sins’ neuromarketing post, but the event promises to be a lively affair with a diverse array of perspectives. Come check it out if you are in the Bay Area next week!

Grab some details on the event, or check out the event poster for more information.

The Seven Sins of Neuromarketing

I got quoted in a random neuromarketing article recently. In the flurry of people I have been chatting with about statistics and functional neuroimaging I often neglect to ask what organizations they are associated with. In this case it was Forbes magazine.

In the online version of the article there was a user comment from a neuromarketing company CEO defending the honor of his business and the field in which they operate. He went so far as to compare the launch of neuromarketing with the initial steps of market research in the early 20th century. He further argued that neuromarketing would bring about the next revolution in understanding consumer behavior.

I have to admit, my gut reaction on first reading this statement was one of mild disgust. This got me thinking about why neuromarketing hangs in a cloud of disdain among many scientists. Below are some of the ‘sins’ which I feel currently plague the field of neuromarketing. This is all just my opinion of course, but I do think that it raises some interesting points for discussion.

1) The curtain of proprietary analysis methods limits our knowledge of how effective neuromarketing can be.

Neuromarketing seems to be driven primarily by private industry, not academia. This is not to say that research into consumer behavior has not occurred at the university level; there has been a lot of good neuroeconomics research in the last several years. Still, it is mostly private companies that are driving the application of these findings to practical consumer behaviors. Because these companies compete with each other, they are reluctant to give away the recipe for their secret analysis sauce. From the outside, this means that every neuromarketing company’s analysis pipeline is a black box, with data going in one end and the results-you-need coming out the other.

My colleagues and I have taken the position that fMRI research using incorrect statistics can generate a large number of false positives. That is, many of the results will be there simply because of noise. Because so much of the current neuromarketing data is hidden behind the veil of proprietary analysis methods, it is impossible to judge how successful their methods actually are, and to what degree their findings are false positives.
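Here is a quick way to see how noise alone produces “results.” The sketch simulates a brain in which nothing is truly active. Under the null hypothesis each voxel’s p-value is uniform on [0, 1], so every voxel that passes the threshold is a false positive. The voxel count and the uncorrected p < 0.001 threshold are hypothetical choices for illustration, and the simulation treats voxels as independent, which real fMRI data are not.

```python
import random


def count_false_positives(n_voxels, alpha, seed=0):
    """Count voxels passing `alpha` when every voxel is pure noise.

    Each null p-value is drawn uniformly from [0, 1), so every hit is a
    false positive. Voxels are treated as independent (a simplification).
    """
    rng = random.Random(seed)
    return sum(1 for _ in range(n_voxels) if rng.random() < alpha)


# Hypothetical volume size and uncorrected voxelwise threshold.
n_voxels, alpha = 130_000, 0.001
hits = count_false_positives(n_voxels, alpha)
print(f"{hits} 'active' voxels in pure noise "
      f"(expected about {n_voxels * alpha:.0f})")
```

Without corrected statistics, a black-box pipeline can return a hundred or so “active” voxels from data that contain no signal at all.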

2) There is little peer-reviewed literature that is specific to neuromarketing.

Neuromarketing is an emerging discipline that will, in time, give us new insight into human behavior. Unfortunately, little peer-reviewed research has currently been published in this area. Search for ‘neuromarketing‘ in the PubMed database of abstracts (www.pubmed.com) and you will find all of ten publications. This must change for neuromarketing to mature.

Again, without peer-reviewed results on the effectiveness of neuromarketing experiments all we have to rely on are self-reports from the neuromarketing firms themselves. An issue similar to the file-drawer problem then exists. The file-drawer problem is when only positive results get published in journals while negative results sit unpublished in the file drawer. Neuromarketing companies will be likely to report positive results while negative results sit undistributed. Either way, the end result is a biased understanding.
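A small simulation makes the file-drawer bias concrete. Every simulated study below measures an effect that is truly zero, but only the “significant” ones get reported. The study count, sample size, unit noise, and one-sided z > 1.96 cutoff are all arbitrary assumptions chosen for illustration.

```python
import math
import random
import statistics


def simulate_file_drawer(n_studies=5000, n_subjects=16, seed=0):
    """Simulate many studies of an effect whose true size is zero.

    Each study averages `n_subjects` noisy measurements (SD = 1) and runs a
    one-sided z-test; only studies with z > 1.96 are "reported".
    Returns (mean effect of all studies, mean effect of reported studies,
    number of reported studies).
    """
    rng = random.Random(seed)
    se = 1.0 / math.sqrt(n_subjects)
    all_effects, reported = [], []
    for _ in range(n_studies):
        effect = statistics.mean(rng.gauss(0.0, 1.0) for _ in range(n_subjects))
        all_effects.append(effect)
        if effect / se > 1.96:
            reported.append(effect)
    mean_reported = statistics.mean(reported) if reported else None
    return statistics.mean(all_effects), mean_reported, len(reported)


mean_all, mean_reported, n_reported = simulate_file_drawer()
print(f"mean effect across all studies: {mean_all:.3f}")
print(f"mean effect across the {n_reported} reported studies: {mean_reported:.3f}")
```

The full set of studies averages out near zero. The reported subset shows a healthy positive effect that does not exist.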

3) Most people’s introduction to neuromarketing is through press releases, not peer-reviewed studies.

In 2006 Marco Iacoboni et al. released an “instant-science” article online revealing their analysis of fMRI data obtained while subjects watched Super Bowl advertisements. The much-discussed post, entitled “Who Really Won the Super Bowl?”, tried to determine the most effective commercial by judging which one activated regions involved in reward and empathy to the greatest degree. They determined that a commercial from Disney fared the best when evaluated by these measures. Many neuroscientists shook their heads and moved on.

In 2007 the same group published an op-ed in the New York Times detailing the results of scanning 20 individuals while they looked at pictures and videos of leading political candidates. They drew conclusions about how voters evaluated the candidates by examining activity in areas like the amygdala and anterior cingulate. For example, they concluded that amygdala activity indicated a state of anxiety and cingulate activity indicated cognitive conflict. These oversimplifications were so well publicized and widely distributed that a number of leading neuroscientists felt compelled to publish a letter in the New York Times calling the Iacoboni results into question.

Let’s put it this way: when many of the top minds in neuroimaging feel compelled to assemble a letter to the New York Times about your non-peer-reviewed neuromarketing/neuropolitics results, then the field has a problem.

There are a handful of peer-reviewed neuromarketing papers that do deliver. One recent paper by Michael Schaefer was a very interesting investigation into the representation of brand associations. However, studies of this type are rare, and the signal-to-noise ratio of information in the press remains very low.

4) Neuromarketing methods are not immune to subjectivity and bias.

One of the most highly touted aspects of neuromarketing methods is that they are free from subjectivity and bias on the part of the participant. For example, asking a subject what they think of a particular brand muddies the waters with conscious consideration. The person’s response will be colored by a complex web of tangential cognitive factors and contextual biases. The promise of neuromarketing is that you can bypass these confounding factors to get at the heart of the matter – the real representation of the brand. While this is true to a degree, an entirely new set of confounding factors is introduced during the analysis of neuromarketing data.

While many neuromarketing measures are indeed more objective than verbal reports, I must disagree with the claim that they are unfiltered, true reports of the underlying representation. While the signals are not filtered by the consciousness of the research subject, a great deal of manipulation and filtering of the data is done by the researcher. This introduces the potential for bias, simply by a different avenue.

Small changes in processing pipelines can have a huge impact on the power of fMRI to detect relevant signals; some excellent papers by Stephen Strother come to mind on this point. Without knowing what happens inside these companies’ pipelines, we have no way of judging how objective their analyses are.

5) The value per dollar of neuromarketing methods has yet to be determined.

Neuromarketing studies are expensive. The Forbes article says that an average EEG or fMRI marketing study costs in the neighborhood of $50,000. Immediately this number can trigger a ‘more expensive = better’ response, especially if you have a large budget to support such studies. What rarely gets discussed is what kind of value you obtain in return for the huge amount of money that is spent.

The key question in neuromarketing is what information you can get with EEG / fMRI / eye tracking / biometrics that you cannot obtain using other methods. If I can spend $1000 on a traditional market study that gets me 85% of what a $50,000 fMRI study does, then the return on my neuromarketing investment is not great. Thinking about it another way, how much more (or less) could I learn from 50 traditional studies than from one neuromarketing study at the same total cost?
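The back-of-the-envelope comparison above can be written out explicitly. The sketch takes the paragraph’s scenario at face value: the $50,000 fMRI study is scored as 1.0 and the $1,000 traditional study as 0.85. It assumes, as a big simplification, that “value” is a single number that scales linearly with what you learn.

```python
def value_per_dollar(relative_value, cost):
    """Value obtained per dollar, with value on an arbitrary 0-1 scale."""
    return relative_value / cost


# Scenario from the text: the fMRI study is scored as 1.0, and the
# traditional study delivers 85% of that for a fraction of the price.
fmri_cost, traditional_cost = 50_000, 1_000
traditional = value_per_dollar(0.85, traditional_cost)
neuro = value_per_dollar(1.00, fmri_cost)

print(f"traditional study: {traditional / neuro:.1f}x the value per dollar")
print(f"traditional studies per fMRI budget: {fmri_cost // traditional_cost}")
```

On these assumptions the traditional study delivers about 42 times more value per dollar. The neuromarketing study only wins if it reveals something the other 50 studies could not.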

Many companies are not limited by the extreme cost of neuromarketing studies, and a significant fraction of them are not afraid to take the risk to try something new. Perhaps part of the motivation is also the fear of being left behind – that a competitor will take the risk and gain a competitive advantage in consumer understanding. Whatever the motivation, there will always be a market for neuromarketing methods. Still, we must acknowledge that the value of neuromarketing is an open question.

6) People are rushing the field to make a quick buck, and not everyone is trustworthy.

The emergence of neuromarketing represents a modern-day gold rush in terms of buzz and promises. Brilliant researchers will be attracted to this opportunity and will significantly advance the field of neuromarketing. Morally questionable individuals will also be drawn to the opportunity, and will end up giving the field a black eye. Reputations will build up over time and trustworthy companies will emerge from the fray, but the current situation is more akin to the Wild West than a civilized exchange.

7) The true value of neuromarketing is obscured by the above-mentioned problems.

I thought I would end on a high note. There is certainly significant value to using neuromarketing methods in consumer research. Why else would companies like Nielsen Holdings be investing in neuromarketing firms like NeuroFocus? One of the biggest problems is that the true value of these methods is obscured by those who treat them as a gimmick and have the loudest voices. The next ten years will represent a true shakedown of the neuromarketing industry. Companies that are able to provide real value to their customers will live on, while those who simply seek to make pretty pictures will fall by the wayside. It will be a fascinating time to be an observer of the business and politics in this emerging field.

Conclusions

The above points ignore many other issues facing neuromarketing. I have completely bypassed a discussion of the ethics of neuromarketing. Many people worry that technologies like fMRI will help marketers find the ‘buy button’ in the brain, stripping away people’s free will in product choice. I am not terribly worried about that discussion, perhaps because I am ignoring the problem or perhaps because I know too much about brain function or neuroimaging methods. Regardless, there are other issues and hurdles that neuromarketing must address to grow as a field.

In the end I do wish neuromarketing great success. I simply fear that those who are seeking to profit from its popularity will tarnish the reputation of neuromarketing before it is able to legitimize itself.

CNS 2011 Poster

I had a great time at the Cognitive Neuroscience Society meeting in San Francisco this week. My only complaint was that there wasn’t enough time in the day to catch up with all the people I wanted to see! Beyond that there were some excellent sessions on long-term memory and executive control that I got a lot out of. Overall, I felt like it was a very strong year for CNS.

Below are links to PDF and JPEG copies of the posters that were presented at the conference:

The contribution of specific functional networks to individual variability

Bennett-NetworksICA-2011.pdf

Bennett-NetworksICA-2011.jpg

Default-mode network dysfunction in psychopathic prisoners

Freeman-Psychopathy-2011.pdf

Freeman-Psychopathy-2011.jpg

PAPER: An Argument For Proper Multiple Comparisons Correction

It has been a long road, but our multiple comparisons paper including the salmon has been published. See below for more details, including the abstract and a link to the download page of the journal. If you have any questions or comments please post them below or send me an email directly.

Neural Correlates of Interspecies Perspective Taking in the Post-Mortem Atlantic Salmon: An Argument For Proper Multiple Comparisons Correction

Craig M. Bennett(1), Abigail A. Baird(2), Michael B. Miller(1) and George L. Wolford(3)

(1) Department of Psychology, University of California at Santa Barbara, Santa Barbara, CA 93106

(2) Department of Psychology, Blodgett Hall, Vassar College, Poughkeepsie, NY 12604

(3) Department of Psychological and Brain Sciences, Moore Hall, Dartmouth College, Hanover, NH 03755

Journal of Serendipitous and Unexpected Results, 2010. 1(1):1-5

Early Access: Oct 20, 2010

With the extreme dimensionality of functional neuroimaging data comes extreme risk for false positives. Across the 130,000 voxels in a typical fMRI volume the probability of at least one false positive is almost certain. Proper correction for multiple comparisons should be completed during the analysis of these datasets, but is often ignored by investigators. To highlight the danger of this practice we completed an fMRI scanning session with a post-mortem Atlantic Salmon as the subject. The salmon was shown the same social perspective-taking task that was later administered to a group of human subjects. Statistics that were uncorrected for multiple comparisons showed active voxel clusters in the salmon’s brain cavity and spinal column. Statistics controlling for the family-wise error rate (FWER) and false discovery rate (FDR) both indicated that no active voxels were present, even at relaxed statistical thresholds. We argue that relying on standard statistical thresholds (p < 0.001) and low minimum cluster sizes (k > 8) is an ineffective control for multiple comparisons. We further argue that the vast majority of fMRI studies should be utilizing proper multiple comparisons correction as standard practice when thresholding their data.

Download a PDF of the article here:

http://prefrontal.org/files/papers/Bennett-Salmon-2010.pdf
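The abstract names the two kinds of control, FWER and FDR. The sketch below shows each with the simplest standard procedure: Bonferroni for the family-wise error rate and Benjamini-Hochberg for the false discovery rate. This is not the paper’s own analysis pipeline, and it treats tests as independent, whereas neighboring voxels are spatially correlated. The p-values in the BH example are made up.

```python
def fwer_uncorrected(alpha, m):
    """P(at least one false positive) across m independent null tests."""
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_threshold(alpha, m):
    """Per-test threshold keeping the family-wise error rate <= alpha."""
    return alpha / m


def benjamini_hochberg(p_values, q=0.05):
    """Indices of tests declared significant at false discovery rate q.

    Sort p-values ascending, find the largest rank k with
    p_(k) <= k * q / m, and reject the k smallest p-values (step-up).
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * q / m:
            k = rank
    return sorted(order[:k])


print(f"{fwer_uncorrected(0.001, 130_000):.6f}")   # essentially 1
print(f"{bonferroni_threshold(0.05, 130_000):.2e}")
print(benjamini_hochberg([0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205]))
# → [0, 1]
```

Bonferroni is conservative when tests are correlated, as neighboring voxels are. FDR trades a controlled proportion of false discoveries for more power.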

Riverside Presentation Slides

Just wanted to take a second to thank the kind folks in the Psychology Department at UC Riverside for hosting me this afternoon. I gave a neuroimaging stats talk for their cognitive brown bag series, and it was a really great time!

For anyone who is interested a copy of the slides from my presentation can be downloaded at the link below. If you have any questions or comments feel free to email me – I would love to chat more. Take care UCR!

http://prefrontal.org/files/presentations/Bennett-Riverside-2010.pdf

Spring 2010 Conference Posters

I have been remiss in uploading copies of my spring conference posters. October seems like a fine month to rectify that. Below are links to the research I presented at the Cognitive Neuroscience Society meeting in Montreal and at the Organization for Human Brain Mapping meeting in Barcelona. Both meetings were fantastic – I got to meet a lot of new people and experience all the awesomeness that Montreal and Barcelona have to offer.

* How reliable are the results from fMRI?

Conference Poster: [PDF] [JPEG]

* A device for the parametric application of thermal and tactile stimulation during fMRI

Conference Poster: [PDF] [JPEG]

“MATLAB code practices that make me cry”

Great post from Doug Hull at MathWorks on the Top 10 MATLAB programming practices that only lead to consternation and regret.

It is a fun read:

http://blogs.mathworks.com/videos/2010/03/08/top-10-matlab-code-practices-that-make-me-cry/

Personally, I have often been guilty of #9 and #3…