Significant Differences

One of the first things you learn in an introductory psychology class is the topic of cognitive bias. These are situations or contexts in which human beings cannot reliably make effective judgements or discriminations. For instance, information that tends to confirm our own assumptions is generally judged to be correct (Confirmation Bias). Another example is the disproportionate attention given to negative experiences relative to positive experiences (Negativity Bias). In each situation, perception and decision making are distorted even though we should know better. We may need to come up with a new bias to explain investigator behavior. Significance Bias, anyone?

There is a great article by Nieuwenhuis, Forstmann, and Wagenmakers in this month’s edition of Nature Neuroscience. Entitled “Erroneous analyses of interactions in neuroscience: a problem of significance”, the paper discusses the problem of how to gauge when two effects differ in neuroscience. It turns out that many papers misjudge the difference between effects by basing their judgement on significance values, even though they should know better.

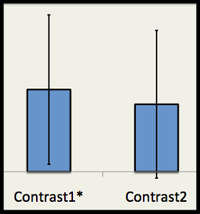

The crux of the issue is that it is improper to judge the difference between two effects by comparing their significance levels. The fact that one effect is significant (i.e. p < 0.05) and another is not (i.e. p > 0.05) does not necessarily mean that the two effects are themselves significantly different. You have to explicitly test for that.
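To make the point concrete, here is a minimal sketch in Python. The numbers are made up for illustration (they are not taken from either paper), and the sketch assumes the two effect estimates are independent and approximately normal, so a simple z-test applies. The function names are hypothetical helpers, not part of any library.

```python
import math


def two_sided_p(z):
    """Two-sided p-value for a z statistic under a standard normal."""
    return math.erfc(abs(z) / math.sqrt(2))


def z_test(estimate, se):
    """Test a single estimate against zero; returns (z, p)."""
    z = estimate / se
    return z, two_sided_p(z)


def difference_test(est_a, se_a, est_b, se_b):
    """Test the difference between two estimates.

    Assumes the estimates are independent, so their variances add.
    """
    diff = est_a - est_b
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    return z_test(diff, se_diff)


# Illustrative (hypothetical) effects: (estimate, standard error)
effect_a = (25.0, 10.0)
effect_b = (10.0, 10.0)

z_a, p_a = z_test(*effect_a)
z_b, p_b = z_test(*effect_b)
z_d, p_d = difference_test(*effect_a, *effect_b)

print(f"Effect A:   z = {z_a:.2f}, p = {p_a:.3f}")
print(f"Effect B:   z = {z_b:.2f}, p = {p_b:.3f}")
print(f"Difference: z = {z_d:.2f}, p = {p_d:.3f}")
```

With these numbers, effect A clears the p < 0.05 threshold and effect B does not, yet the direct test of A minus B gives a p-value of roughly 0.29. The two effects are not significantly different from each other, which is exactly the trap described above. In a real analysis with correlated measurements (the same subjects, or neighboring brain regions) the independence assumption would not hold, and you would test the interaction within a proper model instead.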

In fMRI, this could mean relating one brain area that is significant to another brain area that is not significant. The temptation is to discuss the significant region as being more active than the nonsignificant region based on the fact that the latter region was below the significance threshold. This actually may or may not be the case.

Andrew Gelman and Hal Stern wrote a similar article on the problem a few years ago. The focus of their piece was simply to draw attention to the issue through the use of several theoretical and real life examples. While they were able to say that the problem existed, they were unable to say how prevalent the problem was across any particular scientific discipline. The power of the Nieuwenhuis, Forstmann, and Wagenmakers paper is that it extends the Gelman & Stern work through an analysis of the existing literature to put concrete numbers on how widespread the problem is in neuroscience.

The authors conducted a survey of 513 articles in major neuroscience journals. They identified 157 papers containing an analysis where the authors would be tempted to make an inferential error by focusing on significance. They found that in 78 out of 157 cases (50%) the authors did indeed make an error. That is far higher than I would have guessed, and one of the reasons I felt compelled to write about it today. I mean, come on, fifty percent? Really?

In the next-to-last paragraph the authors specifically state that the error of comparing significance levels is particularly acute in neuroimaging. From my perspective we are almost set up for failure in this regard, as significant regions are visualized in a range of attention-grabbing colors while regions that are not significant are left completely blank.

I could rail on a bit longer, but that is time you could be using to go and read this article. There is a lot of good information in the text – it is short, punchy, and well worth your time.

Some additional discussion on the topic:

http://andrewgelman.com/2011/09/the-difference-between-significant-and-not-significant/

Gelman A and Stern H. (2006). The Difference Between “Significant” and “Not Significant” is not Itself Statistically Significant. The American Statistician 60(4), 328-331.

Nieuwenhuis S, Forstmann BU, and Wagenmakers EJ. (2011). Erroneous analyses of interactions in neuroscience: a problem of significance. Nature Neuroscience 14, 1105-1107.
