The Dangers of Double Dipping (Voodoo IV)

A new article discussing non-independence errors has arrived on the scene, and it is quite good. Nikolaus Kriegeskorte, Kyle Simmons, Patrick Bellgowan, and Chris Baker have authored a Nature Neuroscience paper called ‘Circular analysis in systems neuroscience: the dangers of double dipping’. It makes the same fundamental argument as the original Voodoo Correlations paper (now renamed ‘Puzzlingly high correlations in fMRI studies of emotion, personality, and social cognition’), but in a more general and more effective form.

Kriegeskorte and colleagues lead off with a large-scale survey of fMRI studies published in Nature, Science, Nature Neuroscience, Neuron, and the Journal of Neuroscience, looking specifically at how often non-independent analyses appear in these journals. Of 134 fMRI papers, 42% contained at least one non-independent analysis. That is a sizable fraction of studies in the highest-impact journals, and it shows right away that circular analysis is a widespread issue.

The authors then provide several good examples of why circular, non-independent analysis is dangerous. The first comes from pattern analysis in fMRI. It is common practice to train and test classifiers on separate datasets because of overfitting: a classifier can pick up on noise in the training data rather than on the real signal. When a single dataset of random noise is used for both training and testing, a linear classifier can approach 100% accuracy. When the classifier is trained on one random dataset and tested on another, accuracy falls to approximately chance (50% for two classes). The same effects that lead to classifier overfitting can influence non-independent analyses.
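To make the overfitting point concrete, here is a minimal Python sketch (my own illustration, not the authors' code) that trains a least-squares linear classifier on pure noise with coin-flip labels. The sizes (40 training samples, 200 features, 2000 test samples) and the helper names `fit_linear_classifier`, `accuracy`, and `simulate_classifier` are hypothetical choices. Because there are more features than samples, the pseudoinverse solution reproduces the random training labels exactly, while on a fresh, independent noise dataset the same weights do no better than chance.

```python
import numpy as np


def fit_linear_classifier(X, y):
    """Least-squares linear classifier: weights w with X @ w ~= y, labels in {-1, +1}.

    With more features than samples (and X of full row rank, which random
    Gaussian data almost surely is), np.linalg.pinv returns an exact solution,
    so the fit reproduces the training labels perfectly even when they are noise.
    """
    return np.linalg.pinv(X) @ y


def accuracy(X, y, w):
    """Fraction of samples whose predicted sign matches the label."""
    return float(np.mean(np.sign(X @ w) == y))


def simulate_classifier(n_train=40, n_test=2000, n_features=200, seed=0):
    rng = np.random.default_rng(seed)
    # "Data" with no signal at all: Gaussian noise and coin-flip labels.
    X_train = rng.standard_normal((n_train, n_features))
    y_train = rng.choice([-1.0, 1.0], size=n_train)
    # A second, independent noise dataset drawn the same way.
    X_test = rng.standard_normal((n_test, n_features))
    y_test = rng.choice([-1.0, 1.0], size=n_test)

    w = fit_linear_classifier(X_train, y_train)
    same_data = accuracy(X_train, y_train, w)   # train and test on the same data
    independent = accuracy(X_test, y_test, w)   # test on independent data
    return same_data, independent


if __name__ == "__main__":
    same_data, independent = simulate_classifier()
    print(f"same data: {same_data:.2f}  independent data: {independent:.2f}")
```

With these settings the same-data accuracy comes out at 1.00, while the independent-data accuracy lands near 0.50. Dropping the feature count below the sample count weakens the same-data effect, a quick way to see that overfitting depends on how much freedom the model has relative to the data.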

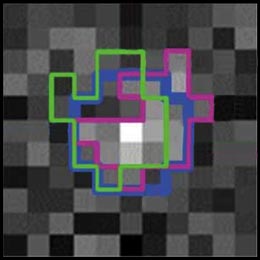

The second example was an ROI analysis of simulated fMRI data. The authors demonstrated (as shown in the figure above) that noise at the fringes of an ROI can influence the shape of the ROI, and therefore the statistics later used to characterize it. Because voxels whose noise happens to push them over the selection threshold get pulled into the ROI, measuring the ROI on the same data almost always yields inflated statistics and effect sizes. This is the most intuitive figure I have seen describing the effect of non-independence.
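The ROI example can also be mimicked in a few lines. The sketch below is a stripped-down 1-D stand-in for the paper's simulation, not a reproduction of it: 100 voxels, a block of 20 with a true effect of 1.0, unit Gaussian noise that is independent across voxels (no spatial smoothness), and a selection threshold of 1.5, all hypothetical choices, as are the names `roi_mean` and `simulate_roi`. The ROI is defined from one simulated run, and its mean is then measured either on that same run (circular) or on a second, independent run.

```python
import numpy as np


def roi_mean(values, selected):
    """Mean of `values` over the selected voxels, one number per simulated run."""
    return np.where(selected, values, 0.0).sum(axis=1) / selected.sum(axis=1)


def simulate_roi(n_voxels=100, roi_start=40, roi_stop=60, effect=1.0,
                 threshold=1.5, n_sims=2000, seed=0):
    rng = np.random.default_rng(seed)
    # Ground truth: a contiguous block of voxels with a fixed effect, zero elsewhere.
    truth = np.zeros(n_voxels)
    truth[roi_start:roi_stop] = effect
    # Two independent noisy measurements of the same truth (think: two runs).
    run_a = truth + rng.standard_normal((n_sims, n_voxels))
    run_b = truth + rng.standard_normal((n_sims, n_voxels))

    # Define the ROI from run A alone: every voxel whose measured effect passes threshold.
    selected = run_a > threshold
    keep = selected.any(axis=1)  # drop the rare simulations with an empty ROI
    selected, run_a, run_b = selected[keep], run_a[keep], run_b[keep]

    circular = roi_mean(run_a, selected).mean()     # select and measure on run A
    independent = roi_mean(run_b, selected).mean()  # select on A, measure on B
    true_value = roi_mean(truth, selected).mean()   # actual mean effect of the selected voxels
    return circular, independent, true_value


if __name__ == "__main__":
    circular, independent, true_value = simulate_roi()
    print(f"circular: {circular:.2f}  independent: {independent:.2f}  true: {true_value:.2f}")
```

With these settings the circular estimate should come out around 2, far above the true mean effect of the selected voxels (around 0.5, since roughly half of the selected voxels are noise-driven false positives), while the independent estimate tracks the true value closely. The independently measured ROI is still imperfect; it simply is not biased by the noise that was used to draw it.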

One strength of this paper is that it discusses shortcomings common in functional imaging without being heavy-handed. The authors spend a fair amount of text at the beginning and end describing how many systems neuroscience methods are prone to circular analysis, such as identifying neurons with specific response properties or selecting genes in microarray studies. They also never call anyone out by name, instead using their own data to provide examples of non-independent analysis.

In my mind, the best part of the paper is the decision tree the authors provide for determining whether you are conducting a circular, non-independent analysis. It walks you through your analysis step by step and guides you toward methods that keep your analyses independent. Brilliant.

If you do grab a copy of this paper, by all means also download the PDF of the supplementary materials. It is even better than the paper, trust me. It contains a 32-point, FAQ-style discussion of data independence that is the best tutorial on the topic I have seen. Some parts are quite technical, but most of the answers come down to a straightforward ‘yes’ or ‘no’.

In conclusion, the authors argue that non-independent analyses should not be acceptable in scientific publications and that a large number of papers may need to be reanalyzed or replicated. Vul et al. reached the same conclusion in the Puzzlingly High Correlations article. Comparing the two papers, I think Kriegeskorte and colleagues make the case more effectively. Puzzlingly High Correlations was a real wake-up call for functional neuroimaging and had all the drama of naming names and pointing fingers at social neuroscience. Still, I am drawn to Kriegeskorte’s approach. With far less consternation, the paper describes how many fields have circular analysis problems, identifies the issues circular analysis causes, and provides a great deal of detail on how to avoid it in the future.

3 Responses to “The Dangers of Double Dipping (Voodoo IV)”

Shen-Mou Hsu - July 9th, 2009

Hi,

Your blog is very informative. There is one thing I do not quite understand in the article. In example 2, the authors argued that non-independent data led to an overfitted ROI because some voxels at the fringe were included or excluded, but on closer inspection, this also applies to the ROI derived from independent data. Could you shed some light on this?

Thanks

Shen-Mou

You are correct that the ROI derived from the independent data does not exactly match the true ROI either. The benefit of an independent analysis is that the noise in the second, independent dataset played no part in choosing the voxels, so when you test that region on it, it is unlikely that those same fringe voxels will be significant again due to noise. Relative to a non-independent analysis, you will have a much better estimate of the true value of the region. Hopefully that helps a bit. ~ Craig

‘Voodoo Correlations in Social Neuroscience’ « The Amazing World of Psychiatry: A Psychiatry Blog - July 18th, 2009

[…] 4th Prefrontal Blog article […]

What are the limits of neuroscience? – Neurologism - March 29th, 2015

[…] The Dangers of Double Dipping (Voodoo IV) […]
