Voodoo Correlations in Social Neuroscience

The progress of science is not a continuous, linear process. Instead it moves forward in fits and starts, occasionally under protest. For a young field, cognitive neuroscience has made dramatic advances in a relatively short amount of time, but there are some mistakes that we keep making again and again. Sometimes we get so set in our methods that it requires a major wake-up call to get us off our asses. The recent paper by Vul, Harris, Winkielman, and Pashler (in press) represents just that: a call to action to change one particular old, bad habit.

Update: download the manuscript for free at:

http://edvul.com/pdf/Vul_etal_2008inpress.pdf.

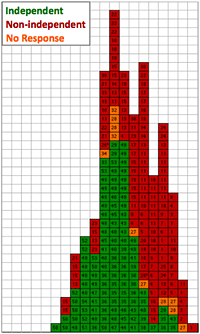

The paper highlights a problem in how neuroimaging data are related to measures of behavior, a practice common in social and cognitive neuroscience. Vul et al. found that some researchers run one correlation analysis across all the voxels in the brain (~160,000+) to find those that are related to their measure of behavior [sin #1]. They then report a second correlation, this time just between a selected set of voxels and their measure of behavior [sin #2]. These voxels are often selected on the basis of a strong correlation in the first analysis. This ‘non-independence error’ causes the second correlation estimate to be dramatically inflated, leading to results that may simply reflect noise in the data.

Sin #1 is a multiple comparisons problem. Vul et al. do not dwell on this error, but it is an issue that is pervasive in neuroimaging. The problem is this: when looking for a relationship between 160,000 voxels and ANYTHING you are likely to get a few significant results simply by random chance. Expanding on the example in the Vul et al. paper, I could correlate the daily temperature reading in Lawrence, Kansas with a set of fMRI data gathered in Santa Barbara, California and I would find a few significant voxels, guaranteed. Does this mean that the temperature in Kansas is related to brain activity? No, most certainly not. Most people try to account for this issue by combining an arbitrarily strict significance threshold with some form of minimum cluster volume. This constrains the results to those that are especially strong and have a certain minimum size. While it is a step in the right direction, this method remains a weak control for multiple comparisons. Instead, false discovery rate (FDR) and familywise error (FWE) correction are both excellent options for handling multiple comparisons. Still, many people ignore these methods in favor of uncontrolled statistics.
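To make the temperature example concrete, here is a rough simulation sketch. Nothing in it comes from the Vul et al. paper: the 20 "subjects", the random number seed, the p < 0.001 cutoff, and the helper names (`voxelwise_correlation`, `r_to_p`, `benjamini_hochberg`) are all assumptions chosen for illustration. Both the "brain" and the "temperature" are pure noise, so every voxel that passes a threshold is a false positive. It uses NumPy and SciPy.

```python
import numpy as np
from scipy import stats


def voxelwise_correlation(data, behavior):
    """Pearson r between each column of data (subjects x voxels) and behavior."""
    dz = (data - data.mean(axis=0)) / data.std(axis=0)
    bz = (behavior - behavior.mean()) / behavior.std()
    return dz.T @ bz / len(behavior)


def r_to_p(r, n):
    """Two-sided p-value for a Pearson r computed from n observations."""
    t = r * np.sqrt((n - 2) / (1.0 - r ** 2))
    return 2.0 * stats.t.sf(np.abs(t), df=n - 2)


def benjamini_hochberg(p, q=0.05):
    """Boolean mask of tests that survive Benjamini-Hochberg FDR at level q."""
    m = p.size
    order = np.argsort(p)
    passed = p[order] <= q * np.arange(1, m + 1) / m
    keep = np.zeros(m, dtype=bool)
    if passed.any():
        k = np.nonzero(passed)[0].max()  # largest rank that meets its threshold
        keep[order[:k + 1]] = True
    return keep


# Hypothetical setup: 20 subjects, 160,000 voxels, no real effect anywhere.
rng = np.random.default_rng(0)
n_subjects, n_voxels = 20, 160_000
brain = rng.standard_normal((n_subjects, n_voxels))  # noise standing in for fMRI
temperature = rng.standard_normal(n_subjects)        # unrelated "Kansas weather"

r = voxelwise_correlation(brain, temperature)
p = r_to_p(r, n_subjects)

n_uncorrected = int((p < 0.001).sum())            # the traditional cutoff
n_bonferroni = int((p < 0.05 / n_voxels).sum())   # a simple FWE correction
n_fdr = int(benjamini_hochberg(p, q=0.05).sum())  # FDR correction

print("uncorrected p < 0.001:", n_uncorrected)
print("Bonferroni (FWE):     ", n_bonferroni)
print("Benjamini-Hochberg:   ", n_fdr)
```

With 160,000 null tests, a p < 0.001 cutoff should let through roughly 160 voxels on average, while the corrected thresholds should let through few or none. Bonferroni is used here only because it is the simplest FWE method to write down; it is not necessarily what an imaging package would apply.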

Sin #2 is the non-independence error. Bottom line: you cannot use the data to tell you where to look for results and then use the same data to calculate your results. That is exactly what many researchers, some of them very prominent, did with their data. They ran an initial correlation analysis to find which brain areas were interesting, and then took the data from those areas and ran a second analysis to calculate a final correlation value. Not surprisingly, the resulting values from this method were sky-high. This ‘non-independence error’ is the cardinal sin described by the Vul et al. paper. The error leads to correlations higher than should be possible given the reliability of the behavioral measures and the reliability of fMRI. In arguing this point, Vul et al. have called into question the results from a number of papers in very prominent journals.
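The inflation is easy to reproduce with noise. The sketch below is a simplified illustration, not the analysis from any of the critiqued papers: the 40 subjects, 20,000 voxels, the r > 0.4 selection threshold, and the helper name `voxelwise_r` are all assumptions. The true brain-behavior correlation is zero everywhere. The first estimate selects voxels and measures them on the same subjects; the second selects on one half of the subjects and measures on the other half.

```python
import numpy as np


def voxelwise_r(data, behavior):
    """Pearson r between each column of data (subjects x voxels) and behavior."""
    dz = (data - data.mean(axis=0)) / data.std(axis=0)
    bz = (behavior - behavior.mean()) / behavior.std()
    return dz.T @ bz / len(behavior)


# Hypothetical setup: the true correlation is zero in every voxel.
rng = np.random.default_rng(1)
n_subjects, n_voxels = 40, 20_000
behavior = rng.standard_normal(n_subjects)
brain = rng.standard_normal((n_subjects, n_voxels))
threshold = 0.4  # arbitrary selection cutoff for this illustration

# Non-independent: pick voxels and report their correlation from the same data.
r_all = voxelwise_r(brain, behavior)
selected = r_all > threshold
circular_r = r_all[selected].mean()

# Independent: pick voxels using the first half of the subjects,
# then estimate the correlation in the held-out second half.
half = n_subjects // 2
r_select = voxelwise_r(brain[:half], behavior[:half])
picked = r_select > threshold
r_test = voxelwise_r(brain[half:], behavior[half:])
independent_r = r_test[picked].mean()

print("voxels selected (same data):", int(selected.sum()))
print("mean r, same data:          ", round(float(circular_r), 3))
print("mean r, held-out data:      ", round(float(independent_r), 3))
```

The same-data estimate has to come out above the selection threshold, because that is how the voxels were chosen. The held-out estimate should hover near zero, which is the right answer for data with no effect in it.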

I have been reading many weblog articles responding to Vul et al. over the last week. Many of them were arrayed squarely against neuroimaging, citing the results as evidence that cognitive neuroscience is on a shaky foundation. This is certainly not the case. Functional neuroimaging is still our best option for non-invasively investigating brain function. I see this paper as part of the natural evolution of a still relatively young field. When we, as a field, first approached the question of relating brain function and behavior, we all did our best to find a good solution to the problem. It turns out that for many researchers their initial solution was flawed. Vul et al. have pointed this out, as have many others. From this point forward it is going to be nearly impossible to make non-independence errors and get the results published. Thus, science has taken another step forward. A lot of toes got stepped on in the process, but it was something that had to happen sooner or later.

Now if we can just get people to correct for multiple comparisons we can call 2009 a success.

– Vul E, Harris C, Winkielman P, and Pashler H. (in press). Voodoo Correlations in Social Neuroscience. Perspectives on Psychological Science.

8 Responses to “Voodoo Correlations in Social Neuroscience”

Matt - January 29th, 2009

We were invited to publish a reply to Vul in the same issue of the journal. Here is a link to what we have submitted. Bottom line – among the many errors in the Vul paper – they mischaracterized the critiqued studies. Nobody runs their analyses the way Vul suggests and they don’t have a non-independence problem.

http://www.scn.ucla.edu/pdf/LiebermanBerkmanWager(invitedreply).pdf

‘Voodoo Correlations in Social Neuroscience’ « The Amazing World of Psychiatry: A Psychiatry Blog - January 30th, 2009

[…] blog article (also […]

Deception Blog / Voodoo science in fMRI and voice analysis to detect deception: compare and contrast - February 1st, 2009

[…] Correlations in Social Neuroscience [pdf]. If not, you’ll find the detail in coverage all over the psych and neuroblogs by googling the title or simply “voodoo […]

Neuroskeptic - February 5th, 2009

Regarding “Sin #1” (I like that!) it’s worth noting that Vul et al. specifically discuss a problem with multiple-comparisons testing which they say stems from a failure to read a paper.

If they’re right, many fMRI results could be spurious. But they only name one paper as a victim of this mistake although they say there are others.

This is a completely different argument to their more widely discussed one about “non-independence”. See my post for more…

prefrontal - February 6th, 2009

Neuroskeptic,

My grad school adviser and I did a department lecture series on multiple comparisons correction a few years ago. We looked at the Forman et al. (1995) paper with interest since it lays out how the effective significance value changes with varying values of minimum cluster size. The only problem is that Forman did the testing in two dimensions, not three. This makes the utility to fMRI virtually nil since the results do not generalize to 3D volumes of data. That is where Eisenberger got into trouble in the Vul paper: they said their effective significance for their 3D data was p < 0.000001 based on the 2D estimates. Adding a cluster size threshold does increase the effective significance value; it is just that nobody knows by how much. It is also not a strong control for the multiple comparisons problem, which is what Vul's argument was. A thorough investigation of the issue is necessary, but it seems like nobody has stepped up to the plate yet.

Cheers,

Craig [Prefrontal]

Neuroskeptic - February 7th, 2009

Right – that’s what I thought. Although you’re clearly the expert here! Personally, I think that the “Forman error” is more serious, and more important, than the non-independence error. A result due to the non-independence error may be inflated, but a result due to the Forman error could be entirely illusory.

prefrontal - February 8th, 2009

Neuroskeptic,

The multiple comparisons problem is still a huge issue in neuroimaging, you are right. I think that part of the problem we are dealing with is an issue of tradition. For the last 10-15 years a significance cutoff of p < 0.001 and an extent threshold of 8 voxels was 'good enough' to deal with the multiple comparisons problem. I think it is generally accepted that this is a poor control, but it's hard to change a well-entrenched habit. That was the magic of the Vul et al. paper: by pointing fingers and naming names they forced the field to debate and confront the problem. We are in the process of getting a paper out that focuses on the multiple comparisons problem. I will send you a copy when we have an accepted draft - it should be a fun read.

Best,

Craig [Prefrontal]

Justin - January 1st, 2010

Hi Craig,

Happy New Year!

Any chance of some constructive feedback on this video I put together on the above study?

Regards

Justin
